Reddit has decided the bounds of your thought

Man is a political creature and one whose nature is to live with others.

- Aristotle

Persons do not exist as such without a world to which they belong.

- Lisa Guenther, Solitary Confinement: Social Death and Its Afterlives

Your keys stand motionless at attention, ready in case you decide to type any more of your long, well-researched dissection of some controversial topic. It has taken a long time: consulting articles, papers, book excerpts. Confident that you have just produced something valuable, a crystallization of knowledge made comprehensible, you feel a mild sense of pride. You submit the comment and wait a few minutes to see where the conversation will go next, or whether someone will find a typo you missed. Nothing has happened. Nobody has voted on your comment. Nobody has replied to your comment. Nobody ever will. You have been shadowbanned.

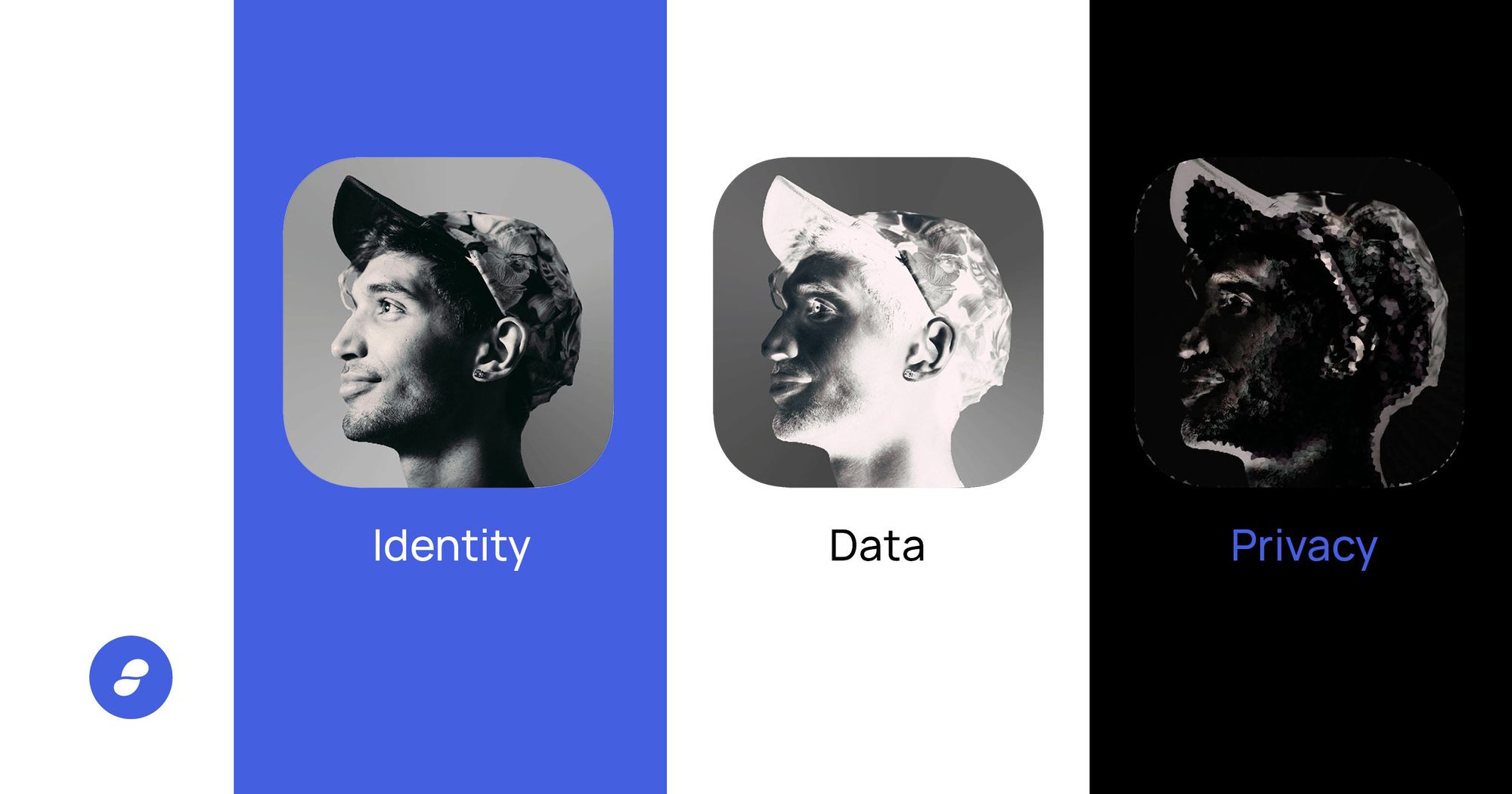

Shadowbanning is a practice wherein you are censored from a platform (almost always permanently), but are not overtly made aware of this. Other people do not hear your speech—in effect, you are not speaking—but you still think that you are. As far as you know, everyone is just ignoring you. Your comment wasn't valuable. Your research wasn't worthwhile. Your time was wasted—and even if you come to realize what actually happened, you're no closer to having your voice heard again.

Shadowbanning was an approach developed "for spammers ... to [make it] as difficult as possible for the spammers to know when they've been caught so they don't improve their tech," according to Reddit CEO Steve Huffman, who has also said that users have a right to know when their posts are removed. (This is the same Steve Huffman who faced criticism for editing comments written by other users.)

It's good that Huffman acknowledges such measures shouldn't be used against ordinary users, but that doesn't stop it from happening. See the /r/shadowban subreddit, where users post daily to check whether they have been shadowbanned (it is one of the only places these users can post for others to see).

This is just one small slice of the censorship that Reddit engages in, however. In 2020, Reddit admins removed 202,749 pieces of content for violating its content policy, including 56k for "hateful content", 27k for "violent content", and 52k for "harassment". In 2019, they removed 222,309 pieces of content, including 25k for "violent content" and 59k for "harassment".

Reddit has also struck entire communities from the website, lest anyone expect safety in numbers: about 2,000 subreddits were banned just after Reddit implemented a policy banning "hate speech" in June 2020. Among them were /r/The_Donald, a right-wing political community of 790,000 subscribers devoted to supporting its eponymous Donald Trump, and the left-wing political podcast subreddits /r/Cumtown and /r/ChapoTrapHouse.

Huffman wants us to understand that "views across the political spectrum are allowed on Reddit—but all communities must work within our policies and do so in good faith, without exception." 🤔

"[Is there anyone] to whom you would delegate the task of deciding, for you, what you could read? To whom you would give the job of deciding, for you, relieve you of the responsibility of hearing what you might have to hear? Do you know anyone ... to whom you would give this job? Does anyone have a nominee? There's no one ... good enough to decide what I can read? Or hear? I had no idea. But there's a LAW that says there must be such a person."

- Christopher Hitchens

Who gets to decide what constitutes unacceptable speech? Hateful speech? Violent speech? Can there be such an authority?

And if there is, even if this authority can be trusted with such decisions, can their successor be trusted? Their subordinates?

This is more than a hypothetical argument. In Germany, the Federal Office for the Protection of the Constitution (Bundesamt für Verfassungsschutz, or BfV), formed in 1950, is responsible for identifying threats to the democratic order. Once a person or political party is identified as such a threat, they can lose fundamental rights like freedom of the press, freedom of assembly, or even property rights. The BfV was formed in the wake of the Nazi regime, and its stated purpose directly opposes the Nazi party's aims. And yet the BfV has faced criticism for, as you may have guessed, employing former members of the Schutzstaffel (SS) and Gestapo, as well as for violating Germans' rights on behalf of foreign intelligence agencies and for destroying files on a neo-Nazi terrorist group.

Extremist political ideologies are one of the more incendiary points in arguments about censorship. One reason is obvious: who gets to decide what constitutes extremism? Even if we agree that some category of ideology is extremist and harmful, we must still confront the possible consequences of censoring it. If, for example, an extremist group is unable to discuss its ideology on platforms such as Reddit, Facebook, or Twitter, then it may be harder for people to find the group and harder for them to fall into it. But if such groups and their ideas are confined to platforms they control, then they also control how those ideas are presented (and they will not hesitate to censor contradictory ideas or evidence). This biased presentation can give curious onlookers a false impression of what such ideologies are really like.

Consider Stormfront, a white supremacist forum which, no doubt aware to some extent of the unique challenges of advocating for such beliefs, banned swastika imagery and the N-word, along with all other racial epithets, from its website.

Can we trust people to judge ideas on their own merit? Reddit thinks not, and has already decided for us which ideas we should or should not be exposed to.

Reddit will also censor content on behalf of specific governments, restricting it within that country even when it remains available everywhere else. This often means that sexual content is regionally restricted: in 2020, for example, Reddit restricted 3,761 pieces of content locally. A significant portion of these restrictions resulted from the Pakistan Telecommunication Authority reporting 812 subreddits with "obscenity and nudity in violation of Section 37 of the Prevention of Electronic Crime Act and Section 292 and 294 of the Pakistan Penal Code," so Reddit conducted an "assessment of the relevant local laws" and region-restricted 753 subreddits in Pakistan. In 2019, the Pakistan Telecommunication Authority had Reddit restrict 1,930 subreddits.

By censoring content locally, Reddit endorses the censorship policies of these governments. Local censorship isolates cultures, makes it harder for people to access adequate education, sexual and otherwise, and, at the most basic level, prevents human cooperation.

Of course, government mandates and content policies are not the only causes of censorship. The moderators of /r/technology created automated post filters to remove any submission containing the keywords "Tesla", "Comcast", "NSA", "Snowden", or "Aaron Swartz". This demonstrates that even the centralized power granted to moderate a single subreddit can be abused to suppress information and thought.

/r/technology lost its status as a default subreddit for this, so we can at least take comfort in knowing that somebody at Reddit recognized it was wrong. But should we have to rely on centralized power to "moderate" itself? Can we?

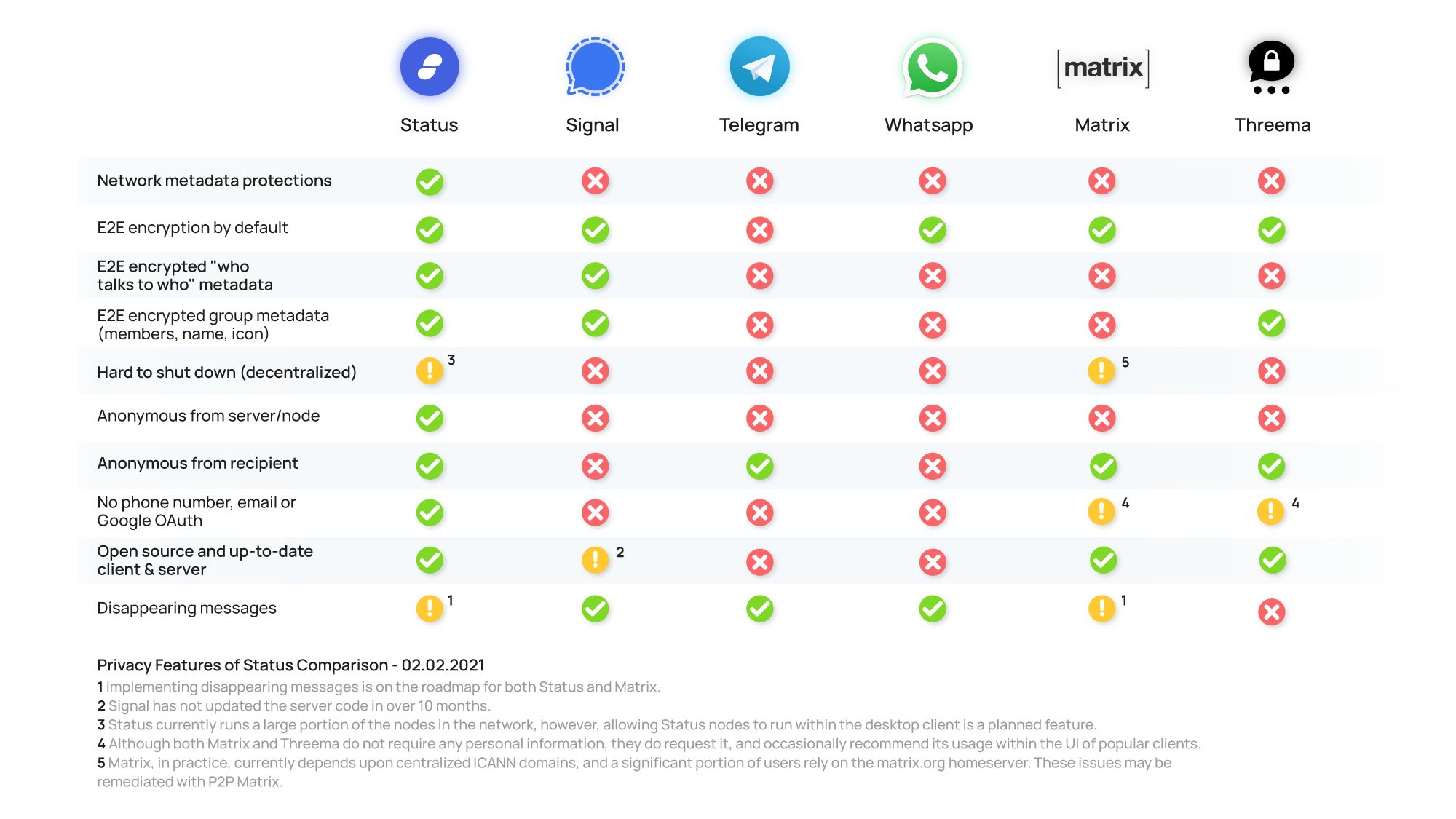

We enable free flow of information. No content is under surveillance. We abide by the cryptoeconomic design principle of censorship resistance. Even stronger, Status is an agnostic platform for information.

Status is designed so that your messages cannot be censored by any central authority. Status will not, and cannot, censor anyone. But how can moderation be handled with such a strong stance against censorship?

How do moderation and censorship resistance coexist? Is it possible for a community to moderate itself without sacrificing free speech? Free thought? A subreddit dedicated to lightbulb enthusiasts may end up removing posts about someone's cat. Is this benign, or is it an unacceptable restriction? A community that decides these things for itself at least has a stronger argument than a community subjected to the decisions of a centralized platform.

Status supports self-regulating communities, at least when they're not lazily banning relevant keywords. When the interests of the community and its moderators are one and the same, censorship can function as a community defending itself against off-topic spam rather than as a means of suppressing thought. Or are these the same thing?

What will the future look like with hundreds of millions of people thinking only Reddit-approved thoughts? Can we answer the call for a decentralized platform for the free exchange of ideas, judged on their own merit instead of by a central authority? Or will centralization, convenience, and conformity doom our privacy and intellectual freedom?
